注意

转到末尾 下载完整的示例代码。

使用 XGBoost 模型转换管道¶

sklearn-onnx 只将 scikit-learn 模型转换为 ONNX,但许多库实现了 scikit-learn API,以便将它们的模型包含在 scikit-learn 管道中。本示例考虑一个包含 XGBoost 模型的管道。只要 sklearn-onnx 知道与 XGBClassifier 关联的转换器,它就可以转换整个管道。让我们看看如何实现这一点。

训练 XGBoost 分类器¶

import os

import numpy

import matplotlib.pyplot as plt

import onnx

from onnx.tools.net_drawer import GetPydotGraph, GetOpNodeProducer

import onnxruntime as rt

import sklearn

from sklearn.datasets import load_iris

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

import xgboost

from xgboost import XGBClassifier

import skl2onnx

from skl2onnx.common.data_types import FloatTensorType

from skl2onnx import convert_sklearn, update_registered_converter

from skl2onnx.common.shape_calculator import (

calculate_linear_classifier_output_shapes,

)

import onnxmltools

from onnxmltools.convert.xgboost.operator_converters.XGBoost import (

convert_xgboost,

)

import onnxmltools.convert.common.data_types

data = load_iris()

X = data.data[:, :2]

y = data.target

ind = numpy.arange(X.shape[0])

numpy.random.shuffle(ind)

X = X[ind, :].copy()

y = y[ind].copy()

pipe = Pipeline([("scaler", StandardScaler()), ("lgbm", XGBClassifier(n_estimators=3))])

pipe.fit(X, y)

# The conversion fails but it is expected.

try:

convert_sklearn(

pipe,

"pipeline_xgboost",

[("input", FloatTensorType([None, 2]))],

target_opset={"": 12, "ai.onnx.ml": 2},

)

except Exception as e:

print(e)

# The error message tells no converter was found

# for XGBoost models. By default, *sklearn-onnx*

# only handles models from *scikit-learn* but it can

# be extended to every model following *scikit-learn*

# API as long as the module knows there exists a converter

# for every model used in a pipeline. That's why

# we need to register a converter.

Unable to find a shape calculator for type '<class 'xgboost.sklearn.XGBClassifier'>'.

It usually means the pipeline being converted contains a

transformer or a predictor with no corresponding converter

implemented in sklearn-onnx. If the converted is implemented

in another library, you need to register

the converted so that it can be used by sklearn-onnx (function

update_registered_converter). If the model is not yet covered

by sklearn-onnx, you may raise an issue to

https://github.com/onnx/sklearn-onnx/issues

to get the converter implemented or even contribute to the

project. If the model is a custom model, a new converter must

be implemented. Examples can be found in the gallery.

注册 XGBClassifier 的转换器¶

转换器实现在 onnxmltools 中: onnxmltools…XGBoost.py。以及形状计算器: onnxmltools…Classifier.py。

然后我们导入转换器和形状计算器。

现在注册新的转换器。

update_registered_converter(

XGBClassifier,

"XGBoostXGBClassifier",

calculate_linear_classifier_output_shapes,

convert_xgboost,

options={"nocl": [True, False], "zipmap": [True, False, "columns"]},

)

再次转换¶

model_onnx = convert_sklearn(

pipe,

"pipeline_xgboost",

[("input", FloatTensorType([None, 2]))],

target_opset={"": 12, "ai.onnx.ml": 2},

)

# And save.

with open("pipeline_xgboost.onnx", "wb") as f:

f.write(model_onnx.SerializeToString())

比较预测¶

使用 XGBoost 进行预测。

print("predict", pipe.predict(X[:5]))

print("predict_proba", pipe.predict_proba(X[:1]))

predict [1 2 0 0 2]

predict_proba [[0.16737716 0.48578635 0.34683657]]

使用 onnxruntime 的预测。

sess = rt.InferenceSession("pipeline_xgboost.onnx", providers=["CPUExecutionProvider"])

pred_onx = sess.run(None, {"input": X[:5].astype(numpy.float32)})

print("predict", pred_onx[0])

print("predict_proba", pred_onx[1][:1])

predict [1 2 0 0 2]

predict_proba [{0: 0.16737714409828186, 1: 0.4857862889766693, 2: 0.34683650732040405}]

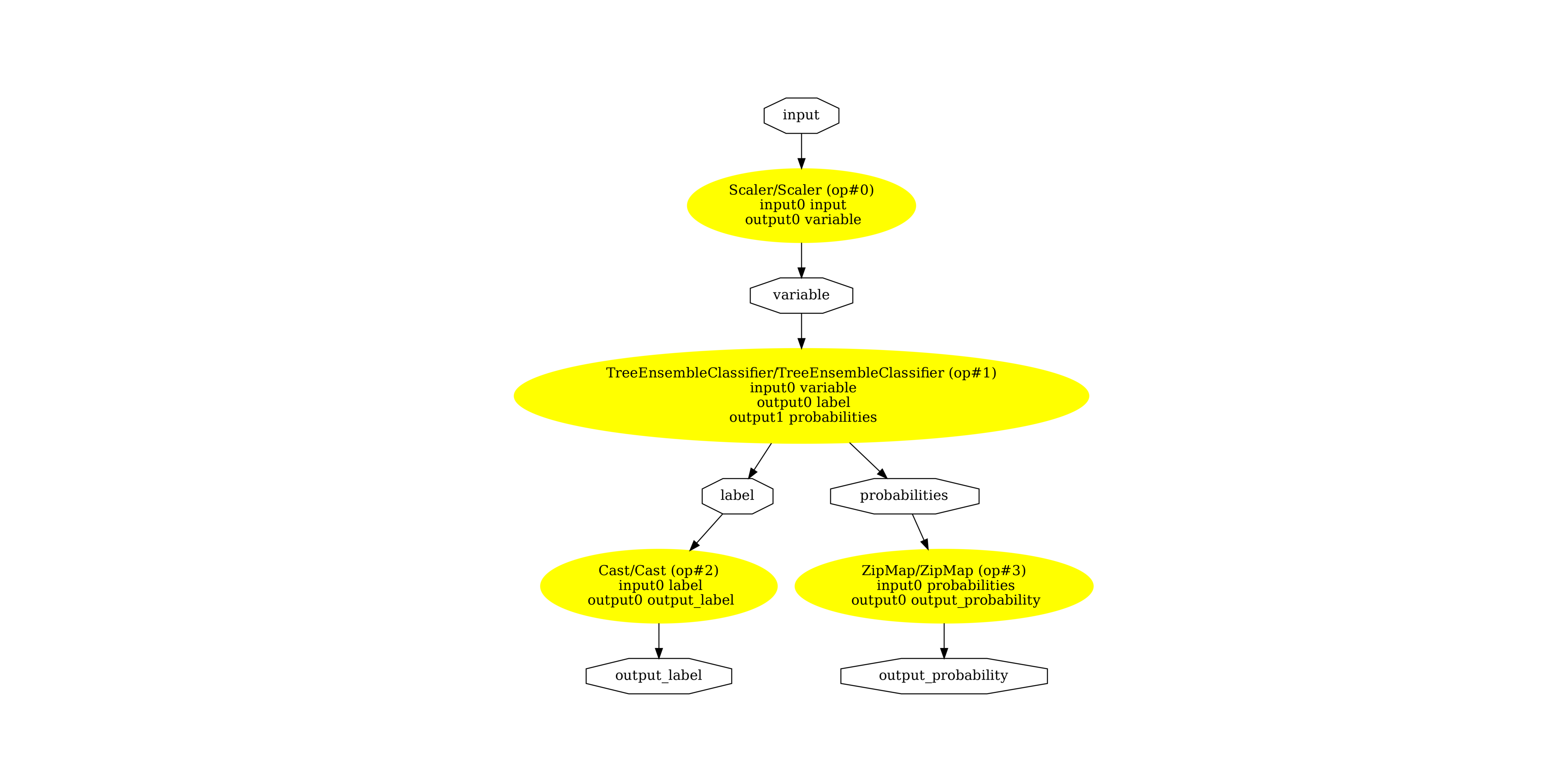

显示 ONNX 图¶

pydot_graph = GetPydotGraph(

model_onnx.graph,

name=model_onnx.graph.name,

rankdir="TB",

node_producer=GetOpNodeProducer(

"docstring", color="yellow", fillcolor="yellow", style="filled"

),

)

pydot_graph.write_dot("pipeline.dot")

os.system("dot -O -Gdpi=300 -Tpng pipeline.dot")

image = plt.imread("pipeline.dot.png")

fig, ax = plt.subplots(figsize=(40, 20))

ax.imshow(image)

ax.axis("off")

(np.float64(-0.5), np.float64(2485.5), np.float64(2558.5), np.float64(-0.5))

此示例使用的版本

print("numpy:", numpy.__version__)

print("scikit-learn:", sklearn.__version__)

print("onnx: ", onnx.__version__)

print("onnxruntime: ", rt.__version__)

print("skl2onnx: ", skl2onnx.__version__)

print("onnxmltools: ", onnxmltools.__version__)

print("xgboost: ", xgboost.__version__)

numpy: 2.3.1

scikit-learn: 1.6.1

onnx: 1.19.0

onnxruntime: 1.23.0

skl2onnx: 1.19.1

onnxmltools: 1.14.0

xgboost: 3.0.2

脚本总运行时间: (0 分钟 4.342 秒)